A complete SEO guide for web developers written by an SEO expert working with web developers on a daily basis, focused on URLs, robots, sitemap, errors, code validation, site speed and more!

So you’re working on a new website. Finish the job and then move on to SEO, right? Wrong! SEO needs to be a vital part of your web development project from the start, so that you can avoid major issues with your search results later; in extreme cases, you may even need to redevelop the site. Take the time to collaborate with your SEO colleagues, or hire an agency of SEO pros, before you start coding.

SEO practices can be divided into three main topics: on-page, indexing, and off-page. On-page and indexing practices (together known as Technical SEO) focus on optimizing the HTML, JavaScript and CSS source code, images and content, while off-page SEO mainly refers to gaining links from other websites. This guide focuses specifically on Technical SEO.

SEO-Friendly Site Structure & URLs

The structure of a website should follow a pyramid, with every internal page reachable within 2 to 3 clicks from the homepage. From an SEO standpoint, a site structure with excessive crawl depth (more than 3 clicks from the homepage) can seriously damage optimization efforts, because users and search engine crawlers are less likely to reach pages buried deep in the website. Another factor to keep a close eye on is internal linking: web pages that are not linked from anywhere on the site (orphan pages) will be overlooked by search engines and Internet users alike.
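Both checks can be automated. The Python sketch below assumes you already have the internal link graph as a dictionary that maps each URL path to the paths it links to (for example, exported from a site crawler). The function names, the example graph and the 3-click threshold are illustrative. A breadth-first search from the homepage gives each page its minimum click depth, and any known page the search never reaches is an orphan.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: depth = fewest clicks needed to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def audit_structure(links: dict[str, list[str]], home: str, max_depth: int = 3):
    """Return (pages deeper than max_depth, pages unreachable from the homepage)."""
    depths = click_depths(links, home)
    known_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    orphans = sorted(known_pages - set(depths))
    return too_deep, orphans

# Hypothetical link graph for illustration.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
    "/blog/post-1/comments": ["/blog/post-1/comments/page-2"],
    "/old-landing-page": [],  # listed in the sitemap, but nothing links to it
}
print(audit_structure(site, "/"))
# (['/blog/post-1/comments/page-2'], ['/old-landing-page'])
```

Pages in the first list are candidates for stronger internal linking, and pages in the second list need at least one link from a crawlable page.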

URLs should reflect the site’s structure and the content of each page; SEO-friendly web addresses are easier to read for both the website’s audience and search engine crawlers. While most CMSs generate URLs automatically, web developers should follow these best practices:

- Use static URLs instead of dynamically generated URLs

- Use descriptive keywords instead of confusing IDs

- Separate words using hyphens instead of underscores

- Avoid uppercase letters and special characters, including non-ASCII characters

- Enforce a consistent trailing-slash policy (URLs either always or never end with a slash)

- Consolidate URLs serving duplicate content using rel="canonical"

- Consolidate the www/non-www and HTTP/HTTPS versions of each URL
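Most of these rules can be enforced at the point where a CMS or framework generates the URL. The following Python sketch shows one possible approach, not any particular CMS's implementation. It turns a page title into a lowercase, ASCII, hyphen-separated slug and applies a single site-wide trailing-slash policy. The function names are hypothetical.

```python
import re
import unicodedata

def slugify(title: str) -> str:
    """Turn a page title into a lowercase, ASCII-only, hyphen-separated URL slug."""
    # Split accented letters into base letter + combining accent, then drop the non-ASCII accents.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters other than a-z/0-9 (spaces, underscores, punctuation) with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")

def apply_slash_policy(path: str, trailing_slash: bool = False) -> str:
    """Enforce one trailing-slash convention for every path on the site."""
    stripped = path.rstrip("/")
    if not stripped:
        return "/"  # the homepage is always "/"
    return stripped + "/" if trailing_slash else stripped

print(slugify("Crème Brûlée Recipe_2024!"))          # creme-brulee-recipe-2024
print(apply_slash_policy("/product-name-keyword/"))  # /product-name-keyword
```

Titles written entirely in non-Latin scripts would produce an empty slug with this approach. A real implementation therefore needs a fallback, such as transliteration or a short numeric ID.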

SEO-friendly URL: https://www.my-site.com/product-name-keyword

Non-SEO-friendly URL: https://www.my-site.com/product/B07BPD2TMd/ref=oh_aui_detailpage_o01_s00?ie=UTF8&psc=1

SEO Best Practices for HTTPS vs. HTTP and www vs. non-www

Google strongly recommends that web developers use HTTPS encryption by default to increase the overall security of the World Wide Web. Moreover, using a secure protocol to transfer data between the user’s browser and the website is an SEO ranking signal.

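On the other hand, from an SEO perspective there is no difference between using www and non-www URLs. However, for large websites, including those with several subdomains and/or hosted in the cloud, most developers consider www the better option for caching and cookie handling. Cookies scoped to the bare (non-www) domain are sent to every subdomain, which rules out serving static assets from a cookie-free subdomain. In addition, DNS does not allow a CNAME record on the bare domain, and many cloud and CDN providers rely on one. Whichever version you choose, use 301 (permanent) redirect rules to send the non-preferred domain to the preferred one.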
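In production, these redirects usually live in the web server or CDN configuration. The Python sketch below only illustrates the decision logic. It assumes that HTTPS plus www.my-site.com is the preferred version, and the constant and function names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.my-site.com"  # assumption: the www version was chosen as preferred
BARE_HOST = "my-site.com"

def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.hostname not in (PREFERRED_HOST, BARE_HOST):
        return None  # not our domain; leave it alone
    # Force HTTPS and the preferred host, keeping the path and query string.
    canonical = urlunsplit(("https", PREFERRED_HOST, parts.path or "/", parts.query, ""))
    return None if canonical == url else canonical

for url in ("http://my-site.com/page", "http://www.my-site.com/page",
            "https://my-site.com/page", "https://www.my-site.com/page"):
    print(url, "->", redirect_target(url))
```

The first three URLs map to https://www.my-site.com/page in a single hop. The preferred URL returns None, so it is served directly with a 200.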

SEO-friendly URL: https://www.my-site.com/page, with the following versions redirecting to it:

- http://my-site.com/page

- http://www.my-site.com/page

- https://my-site.com/page

Non-SEO-friendly URL: the /page webpage can be accessed through multiple URLs

Optimizing the Sitemap.xml File(s)

While most CMSs automatically generate the sitemap.xml file, or multiple sitemap files dedicated to different types of content, web developers should be aware of the following best practices:

- Optionally set the <priority> element to indicate each page’s relative importance (note that Google has stated that it ignores this value)

- Eliminate non-canonical URLs from the sitemap

- Eliminate all pages that return an HTTP status code other than 200 from the sitemap
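If your CMS does not handle these rules, the filtering is easy to apply when you generate the file yourself. The minimal Python sketch below assumes you already know each page's last HTTP status code and its declared canonical URL (for example, from a crawl). The Page type and the function name are illustrative.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize the sitemap namespace as the default xmlns

@dataclass
class Page:
    url: str
    status: int     # last HTTP status code returned by the URL
    canonical: str  # URL declared in the page's rel="canonical" link

def build_sitemap(pages: list[Page]) -> str:
    """Return sitemap XML containing only canonical URLs that answer 200 OK."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page in pages:
        if page.status == 200 and page.canonical == page.url:
            url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
            ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page.url  # "&" etc. escaped automatically
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

pages = [
    Page("https://www.my-site.com/page", 200, "https://www.my-site.com/page"),
    Page("https://www.my-site.com/page?sort=price", 200, "https://www.my-site.com/page"),  # non-canonical
    Page("https://www.my-site.com/old-page", 301, "https://www.my-site.com/old-page"),      # redirects
]
print(build_sitemap(pages))
```

Only https://www.my-site.com/page ends up in the output. The parameterized duplicate and the redirected URL are skipped.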

How HTTP Status Codes Affect SEO

HTTP status codes are issued by a web server in response to a client’s request. From an SEO perspective, a website’s internal links should ideally point only to pages that return 200 OK, the standard response for successful HTTP requests. But we all know that changes happen, and internal pages returning 301 Moved Permanently are bound to appear.

The 404 Not Found status code is returned when the server cannot find the requested URL. Broken internal links should be kept to a minimum (if not eliminated completely), as they damage user experience and can negatively impact search engine rankings; a large number of 404s signals to crawlers that the website is poorly maintained or coded. In short, internal links pointing to 404 pages should be removed or updated, and each missing URL should be redirected to another, similar resource where one exists.

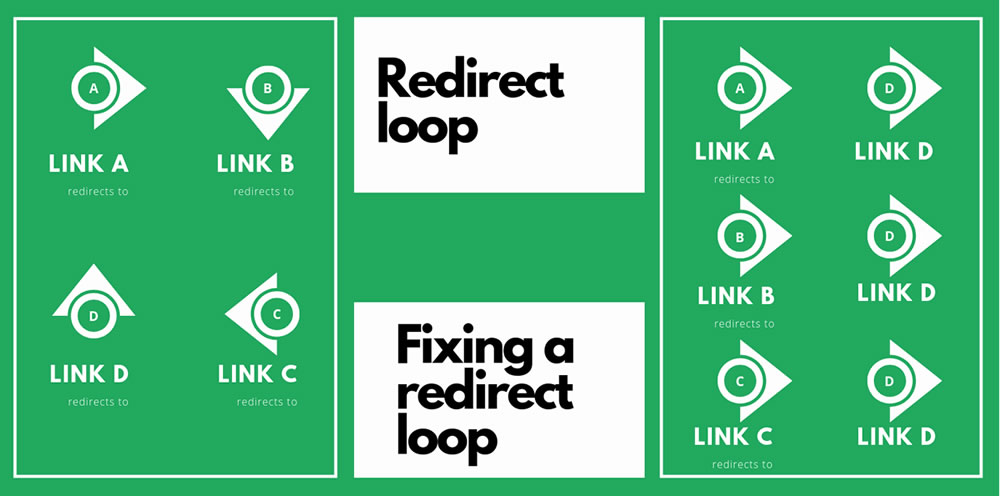

Redirects are viable in most situations (301 and 308 for permanent moves, 302 and 307 for temporary ones), but they should be implemented correctly and kept to a reasonable minimum on-site. Two of the most common examples of incorrect redirect usage are redirect chains (A redirects to B, which redirects to C) and redirect loops (A redirects to B, which redirects back to A). Both have a negative impact on user experience, crawl budget and site speed, and consequently on rankings.
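Chains and loops are easy to detect once you have a list of the site's redirect rules. The Python sketch below assumes those rules are available as a simple source-to-target dictionary of paths, such as a hypothetical export of your server or CMS configuration. For every chain, it suggests pointing the source directly at the final destination.

```python
def trace(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow redirects from start; return the visited path and whether it loops."""
    path = [start]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            return path + [nxt], True  # we came back to a URL already visited
        path.append(nxt)
    return path, False

def audit_redirects(redirects: dict[str, str]):
    """Return ({source: final URL} for chains, {source: looping path} for loops)."""
    chains, loops = {}, {}
    for source in redirects:
        path, is_loop = trace(redirects, source)
        if is_loop:
            loops[source] = path
        elif len(path) > 2:  # more than one hop: source -> ... -> final
            chains[source] = path[-1]
    return chains, loops

rules = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}
chains, loops = audit_redirects(rules)
print(chains)  # {'/a': '/c'}
print(loops)   # {'/x': ['/x', '/y', '/x'], '/y': ['/y', '/x', '/y']}
```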

Customizing & Optimizing the Robots.txt File & Meta Robots Tag

The robots.txt file is a plain-text file, served from the root of the domain, that uses Allow and Disallow rules to tell search engines which of the website’s pages they may crawl. Unless crawlers are causing severe server load issues, developers should not use the robots.txt file to limit the crawl rate.

Developers should also include the location of the sitemap or sitemaps associated with the domain in the robots.txt file:

Sitemap: https://www.my-site.com/sitemap.xml
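Before deploying a robots.txt file, you can sanity-check its rules with Python's standard urllib.robotparser module (site_maps() requires Python 3.8 or later). The file contents below are an illustrative assumption. Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) matching rule, so results can differ when rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt for the example domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Allow: /

Sitemap: https://www.my-site.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://www.my-site.com/cart/checkout"))        # False
print(parser.can_fetch("Googlebot", "https://www.my-site.com/product-name-keyword"))  # True
print(parser.site_maps())  # ['https://www.my-site.com/sitemap.xml']
```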

Keep in mind that, although search engines will not crawl content blocked by a Disallow rule in the robots.txt file, they can still discover the disallowed URLs through links elsewhere on the Internet and index them.

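To keep a page out of search results, use the meta robots tag instead, with its content set to noindex; this instructs search engines not to index the page and not to display it in search results. The page must not also be disallowed in robots.txt. Otherwise, crawlers cannot fetch it and will never see the tag.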

For non-HTML files such as PDFs, which cannot carry a meta tag, send the X-Robots-Tag HTTP header with the value noindex instead. On Apache servers, this can be done by editing the .htaccess file associated with the domain.
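On Apache, the header is typically added with a Header set X-Robots-Tag directive matched to .pdf files. If the site is served by a Python application instead, the same header can be added in a small WSGI middleware. The sketch below is illustrative, and the noindex_pdfs and pdf_app names are hypothetical.

```python
def noindex_pdfs(app):
    """WSGI middleware that adds 'X-Robots-Tag: noindex' to responses for .pdf paths."""
    def wrapped(environ, start_response):
        is_pdf = environ.get("PATH_INFO", "").lower().endswith(".pdf")

        def start(status, headers, exc_info=None):
            if is_pdf:
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start)
    return wrapped

# Hypothetical application that serves a PDF file.
def pdf_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "application/pdf")])
    return [b"%PDF-1.4 ..."]

app = noindex_pdfs(pdf_app)
```

To check the header locally, you could serve the wrapped app with wsgiref.simple_server.make_server("", 8000, app).serve_forever() and inspect the response headers of any .pdf URL.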


Handling Multilingual Websites (Subdomain? Subdirectories? ccTLD?)

When developing multilingual websites, SEO best practices say developers should use locale-specific URLs, along with hreflang annotations (in the page header or in the sitemap) that indicate which pages apply to which locations or languages. Avoid using IP analysis to automatically redirect users to the version of the site that matches their perceived language, since crawlers, which mostly crawl from US IP addresses, may then never see the other versions.

Using locale-specific URLs simply means keeping the content for each language on separate URLs, with the following SEO-friendly options:

| URL structure | Example URL | Main Pro | Main Con |
|---|---|---|---|
| Country-specific domain (ccTLD) | my-site.de | Clear geotargeting | Expensive |
| Subdomains with gTLD | de.my-site.com | Easy separation of sites | Unclear geotargeting from the URL alone (is “de” the language or country?) |
| Subdirectories with gTLD | my-site.com/de/ | Same host (low maintenance) | Harder separation of sites |

Always add hyperlinks to other language versions of a page to give users the option to click on their preferred language.

The value of an hreflang attribute identifies the language in ISO 639-1 format (e.g. en, de, zh), optionally followed by the region in ISO 3166-1 Alpha 2 format (en-gb, en-us, en-au). Add <link rel="alternate" hreflang="lang_code"... > elements to the head section of each page to tell Google about all of the language and region variants of that page, including the page itself. Use x-default to match any language not explicitly listed in the page’s hreflang tags.

    <head>
      <title>A page on my site</title>
      <link rel="alternate" hreflang="en-gb" href="https://www.my-site.com/en-gb/page" />
      <link rel="alternate" hreflang="en-us" href="https://www.my-site.com/en-us/page" />
      <link rel="alternate" hreflang="en" href="https://www.my-site.com/en/page" />
      <link rel="alternate" hreflang="de" href="https://www.my-site.com/de/page" />
      <link rel="alternate" hreflang="x-default" href="https://www.my-site.com/" />
    </head>
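Every variant must carry the same complete set of annotations, including a link to itself, so it is safer to generate them from a single mapping than to write them by hand. The minimal Python sketch below reproduces the example above. The function name and the shape of the mapping are assumptions.

```python
from html import escape

def hreflang_links(variants: dict[str, str], x_default: str) -> str:
    """Render one <link rel="alternate"> per language/region variant, plus x-default."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in variants.items()
    ]
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)

variants = {
    "en-gb": "https://www.my-site.com/en-gb/page",
    "en-us": "https://www.my-site.com/en-us/page",
    "en": "https://www.my-site.com/en/page",
    "de": "https://www.my-site.com/de/page",
}
# The same output goes into the head section of every page listed in `variants`.
print(hreflang_links(variants, "https://www.my-site.com/"))
```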

An alternative to adding hreflang annotations to the HTML is to customize the sitemap, telling search engines about all of the language and region variants of each URL.

Sitemap rules for multilingual websites:

- Specify the xhtml namespace: xmlns:xhtml="http://www.w3.org/1999/xhtml"

- Create a separate <url> element for each URL

- Each <url> element must include a <loc> child indicating the page URL

- Each <url> element must include one <xhtml:link rel="alternate" hreflang="supported_language-code"> child for every alternate version of the page, including itself

    <?xml version="1.0" encoding="UTF-8"?>
    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
            xmlns:xhtml="http://www.w3.org/1999/xhtml">
      <url>
        <loc>https://www.my-site.com/en/page</loc>
        <xhtml:link rel="alternate" hreflang="de" href="https://www.my-site.com/de/page"/>
        <xhtml:link rel="alternate" hreflang="en" href="https://www.my-site.com/en/page"/>
      </url>
      <url>
        <loc>https://www.my-site.com/de/page</loc>
        <xhtml:link rel="alternate" hreflang="de" href="https://www.my-site.com/de/page"/>
        <xhtml:link rel="alternate" hreflang="en" href="https://www.my-site.com/en/page"/>
      </url>
    </urlset>
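Generating these entries programmatically guarantees that every url entry lists the same complete set of alternates. The minimal Python sketch below uses the standard library's xml.etree.ElementTree and assumes the variants are available as a mapping from language code to URL. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"
# Global registration: the sitemap namespace becomes the default xmlns, XHTML gets the "xhtml" prefix.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("xhtml", XHTML_NS)

def hreflang_sitemap(variants: dict[str, str]) -> str:
    """One <url> per variant, each listing every variant (itself included) as xhtml:link."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in variants.values():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for code, alternate_url in variants.items():
            ET.SubElement(url, f"{{{XHTML_NS}}}link",
                          rel="alternate", hreflang=code, href=alternate_url)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

print(hreflang_sitemap({
    "de": "https://www.my-site.com/de/page",
    "en": "https://www.my-site.com/en/page",
}))
```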


Leverage Structured Data for Better SERP Presence

Structured data provides search engines with explicit clues about the meaning of a page. It is added as in-page markup (JSON-LD, Microdata or RDFa) on the page that the information applies to. It can enable special search result features and enhancements, such as breadcrumbs, carousels, social profile links, recipes and articles.
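As an illustration, breadcrumbs can be described with the schema.org BreadcrumbList type in JSON-LD. The Python sketch below generates that script tag from a list of (name, URL) pairs. The function name and the example trail are hypothetical, and the generated markup should still be checked with Google's testing tools.

```python
import json

def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build a JSON-LD BreadcrumbList script tag from (name, url) pairs, homepage first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    # Escape "</" so a name containing "</script>" cannot close the tag early ("<\/" is valid JSON).
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

print(breadcrumb_jsonld([
    ("Home", "https://www.my-site.com/"),
    ("Products", "https://www.my-site.com/products"),
    ("Product name", "https://www.my-site.com/product-name-keyword"),
]))
```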

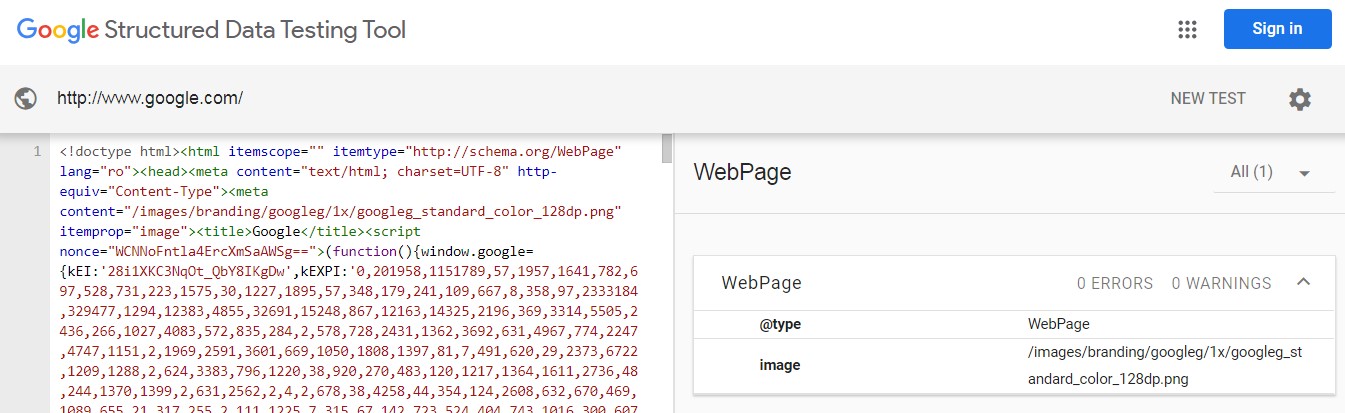

Content types that qualify to appear in rich results include Article, Local Business, Music, Recipe, Video and many more, and they must comply with Google’s structured data guidelines. Compliance with the technical guidelines can be tested using the Structured Data Testing Tool (since replaced by Google’s Rich Results Test and the Schema Markup Validator).

While the vast majority of structured data is designed to render on desktop and mobile devices alike, the Software App (BETA) markup can be added to the body of a web page to better display your app details in mobile search results.

Develop for Mobile with SEO in Mind

Google recommends responsive web design (RWD) for all websites. RWD serves the same HTML code on the same URL regardless of the user’s device (desktop, tablet, mobile, non-visual browser), while rendering the display differently based on the screen size. With only one URL and one version of the HTML to maintain, RWD is simpler and less error-prone than serving separate mobile URLs or using dynamic serving. It can also come at no extra cost if you choose a responsive template or theme for the website.

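With the introduction of mobile-first indexing, under which Google predominantly uses the mobile version of a page’s content for indexing and ranking, developing for mobile with SEO in mind is more important than ever.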

Some of the most common mistakes to avoid when developing for mobile include:

- Blocking JavaScript, CSS, and image files – always allow search engine bots access to the JavaScript, CSS, and image files used by the website

- Using unplayable content, such as Flash or other players that are not supported on mobile devices – use HTML5 instead

- Using fixed-width viewports, causing the page not to scale well for all device sizes – use the meta viewport tag

<meta name="viewport" content="width=device-width, initial-scale=1.0">

- Using small font sizes and placing touch elements too close together – the minimum recommended touch target size is around 48 device-independent pixels on a site with a properly set mobile viewport (see above), and touch targets should be spaced about 8 pixels apart, both horizontally and vertically

- Using popups that partially or completely cover the contents of the page – use simple banners coded inline with the page’s content instead

- Overlooking page load speed – use Google’s PageSpeed Insights to discover any issues that can slow pages down, and address all optimizations recommended in the “Opportunities” sub-section
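The touch-target guideline above comes down to simple arithmetic on element bounding boxes. The Python sketch below assumes you have each tappable element's box in CSS pixels, for example collected in the browser with getBoundingClientRect(). The Target type and the function names are illustrative, and the thresholds are the approximate figures given above.

```python
from dataclasses import dataclass

MIN_SIZE = 48  # minimum target width/height, in CSS (device-independent) pixels
MIN_GAP = 8    # minimum distance between two targets, in CSS pixels

@dataclass
class Target:
    name: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

def distance(a: Target, b: Target) -> float:
    """Shortest distance between two rectangles (0 if they touch or overlap)."""
    dx = max(b.x - (a.x + a.width), a.x - (b.x + b.width), 0)
    dy = max(b.y - (a.y + a.height), a.y - (b.y + b.height), 0)
    return (dx ** 2 + dy ** 2) ** 0.5

def touch_target_issues(targets: list[Target]) -> list[str]:
    """List targets that are too small, then pairs of targets that are too close."""
    issues = [f"{t.name}: smaller than {MIN_SIZE}x{MIN_SIZE}px"
              for t in targets if t.width < MIN_SIZE or t.height < MIN_SIZE]
    for i, a in enumerate(targets):
        for b in targets[i + 1:]:
            if distance(a, b) < MIN_GAP:
                issues.append(f"{a.name} / {b.name}: less than {MIN_GAP}px apart")
    return issues

buttons = [
    Target("Buy", 0, 0, 48, 48),
    Target("Share", 52, 0, 48, 48),  # only 4px to the right of "Buy"
    Target("Help", 0, 100, 30, 20),  # too small
]
for issue in touch_target_issues(buttons):
    print(issue)
```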

Safeguard SEO Rankings by Optimizing Page Speed

In 2018, Google announced that page speed would become a ranking factor for mobile searches, with the caveat that the update would only affect pages that deliver the slowest experience to users.

Google’s recommended tool for evaluating a page’s performance on both mobile and desktop devices is PageSpeed Insights.

PageSpeed Insights provides lab data, useful for debugging performance issues, and field data, useful for capturing the true, real-world user experience of the analyzed page. It then reports Opportunities, Diagnostics and Passed Audits. The Opportunities section provides a set of recommendations designed to speed up page load by optimizing source code (HTML, CSS, JavaScript), server response time (such as Time to First Byte, TTFB), images and more.
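PageSpeed Insights can also be queried programmatically through its public API, which is handy when checking many pages. The Python sketch below assumes version 5 of the API (the runPagespeed endpoint with url and strategy query parameters). It reads only the lab performance score from the Lighthouse part of the JSON response. The helper names are hypothetical, and automated, frequent use of the API requires an API key.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the API request URL; strategy is "mobile" or "desktop"."""
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"

def performance_score(report: dict) -> int:
    """Lab performance score (0-100) from the Lighthouse section of the response."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)

def run_psi(page_url: str, strategy: str = "mobile") -> dict:
    """Call the API and return the parsed JSON report (makes a network request)."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as response:
        return json.load(response)
```

For example, performance_score(run_psi("https://www.my-site.com/page")) would return the page's mobile lab score on the same 0-100 scale shown in the PageSpeed Insights interface.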

Optimizing for Search Engines is Optimizing for Users

This SEO guide was written by an SEO expert working with web developers on a daily basis, and it’s intended to address some of their most frequent questions and comments: “What does coding have to do with SEO?”, “This will cost us x hours in development, do you really need this?”, “I thought SEO was dead” :)) etc.

No, SEO is not dead; it’s just transforming, putting a greater emphasis on UX. Technical SEO is where web development skills intersect with Search Engine Optimization best practices, improving the performance of the site for the greater good: excellent user experience.